<aside> 📍

You are not lost.

You have arrived at a sub-page in the AIxDESIGN Archive.

This is a public repository – our way of working in the open and sharing as we go.

Have fun!

</aside>

Since 2023, AIxDESIGN has been holding the idea of Slow AI, and throughout 2024 we dove deep into it through research, community events, and artist collaborations to expand on what it might mean.

This blog post serves as an introduction to Slow AI.

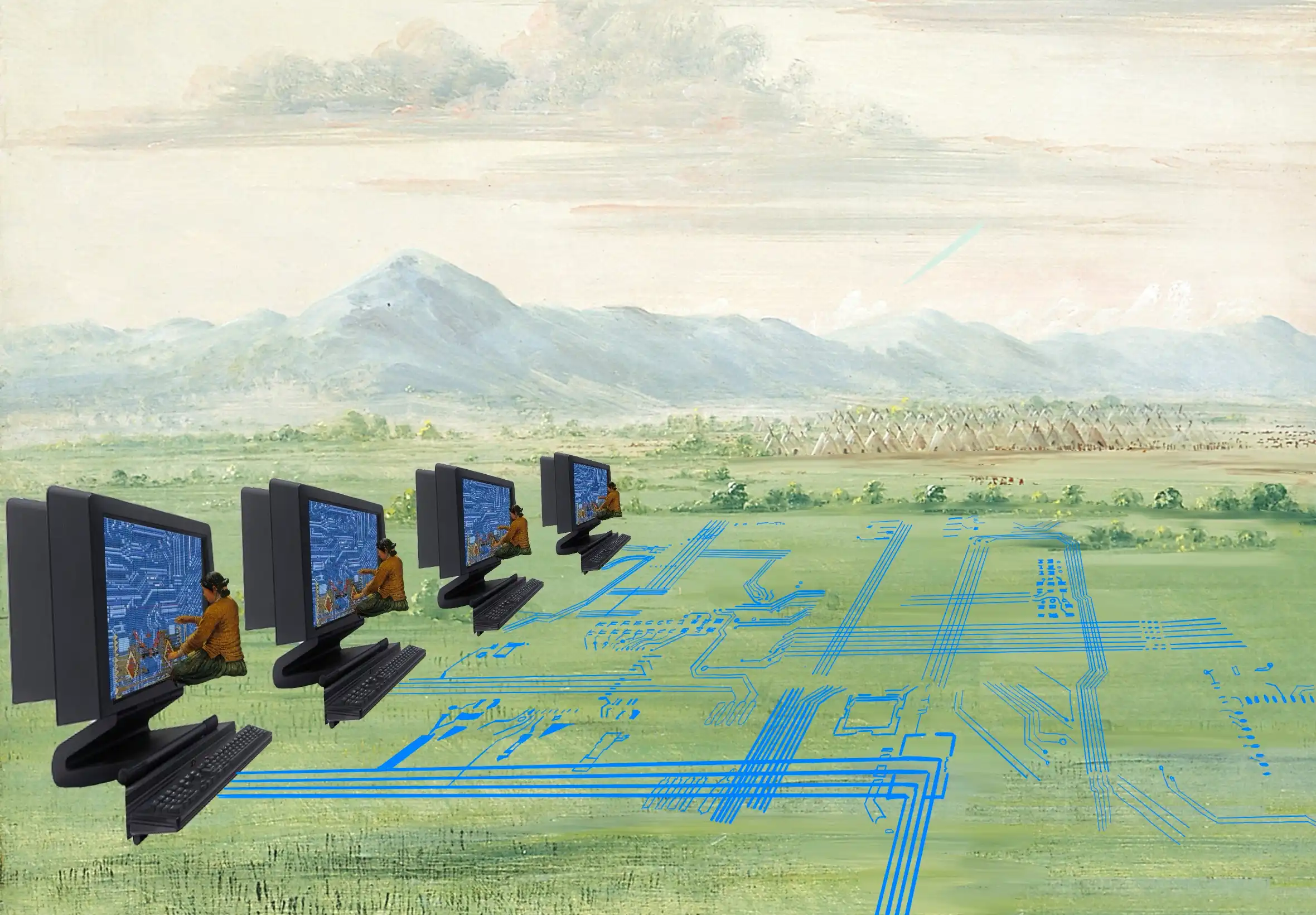

IMG: Weaving Wires, Hanna Barakat & Archival Images of AI + AIxDESIGN, 2024

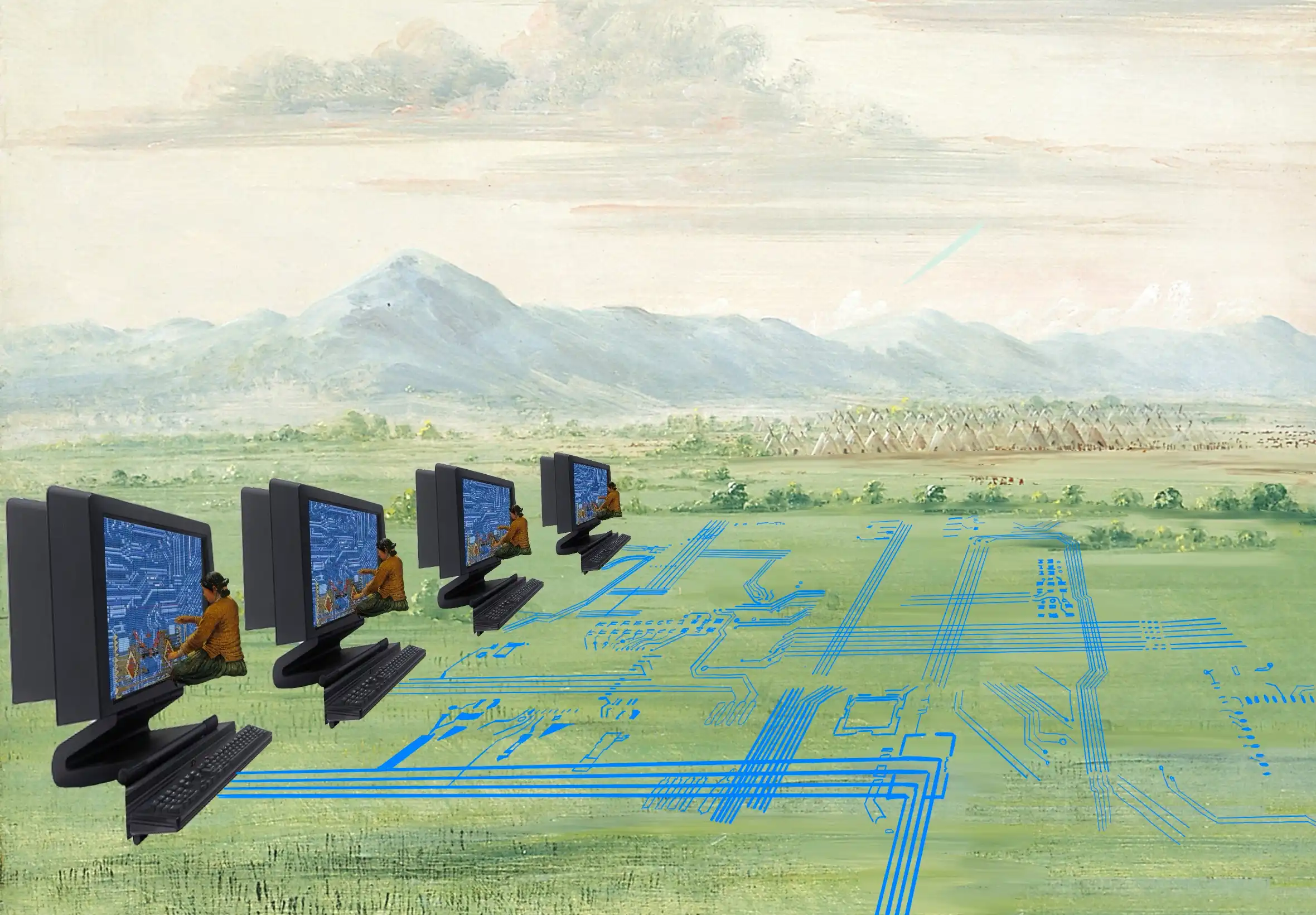

IMG: Ben Grosser’s redaction poetry version of Andreessen’s Techno-Optimist Manifesto

Throughout 2023, we in the AIxDESIGN community grew increasingly disillusioned with the dominant discourse and narratives around AI: stories told and retold by Big Tech companies like OpenAI, Microsoft, Google, and Meta, which dictate how the public imagines, talks about, and interacts with AI technologies.

For-profit products such as ChatGPT and the organizations behind them have come to define public perception not only of what AI is but also of what it can and will likely become. These narratives are not neutral; they serve the interests of the tech giants who seek to maximize growth, profit, and stakeholder value - the usual imperatives of capitalism.

‘Companies like OpenAI, Google, and Microsoft were quoted 20x more than the remaining top 20 orgs put together.’ - Framing AI: Beyond Risk and Regulation, Rootcase, 2023

This landscape leaves little space for alternative visions to take root.

Taglines like ‘AI benefits all of humanity’ push forward underlying ideologies and beliefs, created to sway public opinion into believing that artificial general intelligence (AGI) not only exists, but will lead us to ‘a promised land’ and bring about ‘human salvation.’

‘Silicon Valley’s most powerful monopoly may be how we perceive technology.’

Silicon Valley’s vision for AI? It’s religion, repackaged, Sigal Samuel

💡 A recent paper by Timnit Gebru & Émile P. Torres traced the origins of some of these underlying ideologies advocating for AGI, bundling them under the TESCREAL acronym.

IMG: Source Unknown

At the same time, there is a lot of panic around AI. Fear-mongering headlines dominate the news, sounding alarms about whether AI will replace us or go rogue.

Not all fears are unfounded. Very real harms and risks - from AI-driven job displacement and bias to environmental impact and more - are justifiably fueling collective concerns.

Struggling to reconcile these stories - AI as the key to humanity’s salvation or its doom - public discourse often swings between two polarizing positions:

👍 techno-optimists / hype / utopia

👎 doomers / criti-hype / dystopia

Neither seems helpful to us. This binary framing of two warring visions is not fertile ground for constructive conversations. It traps us in false debates and fails to capture the nuanced and complex ways, both beautiful and concerning, in which AI is already shaping our lives.

AI isn’t coming in the future - it’s already here, influencing how we live, work, and connect with others every day. AI is a tool - excellent for certain tasks but limited in others. What matters most is who makes these tools, how they deploy them into the world, and how people use them.

Not resonating with either extreme, we try to practice a third position: one that exists in the in-between, acknowledges a plurality of (sometimes conflicting) truths, and centers our agency within them.

‘There are “non-grief” ways of thinking through a philosophy of artificialized intelligence that are neither optimistic nor pessimistic, utopian nor dystopian.’ - The Five Stages Of AI Grief, Benjamin Bratton, 2024

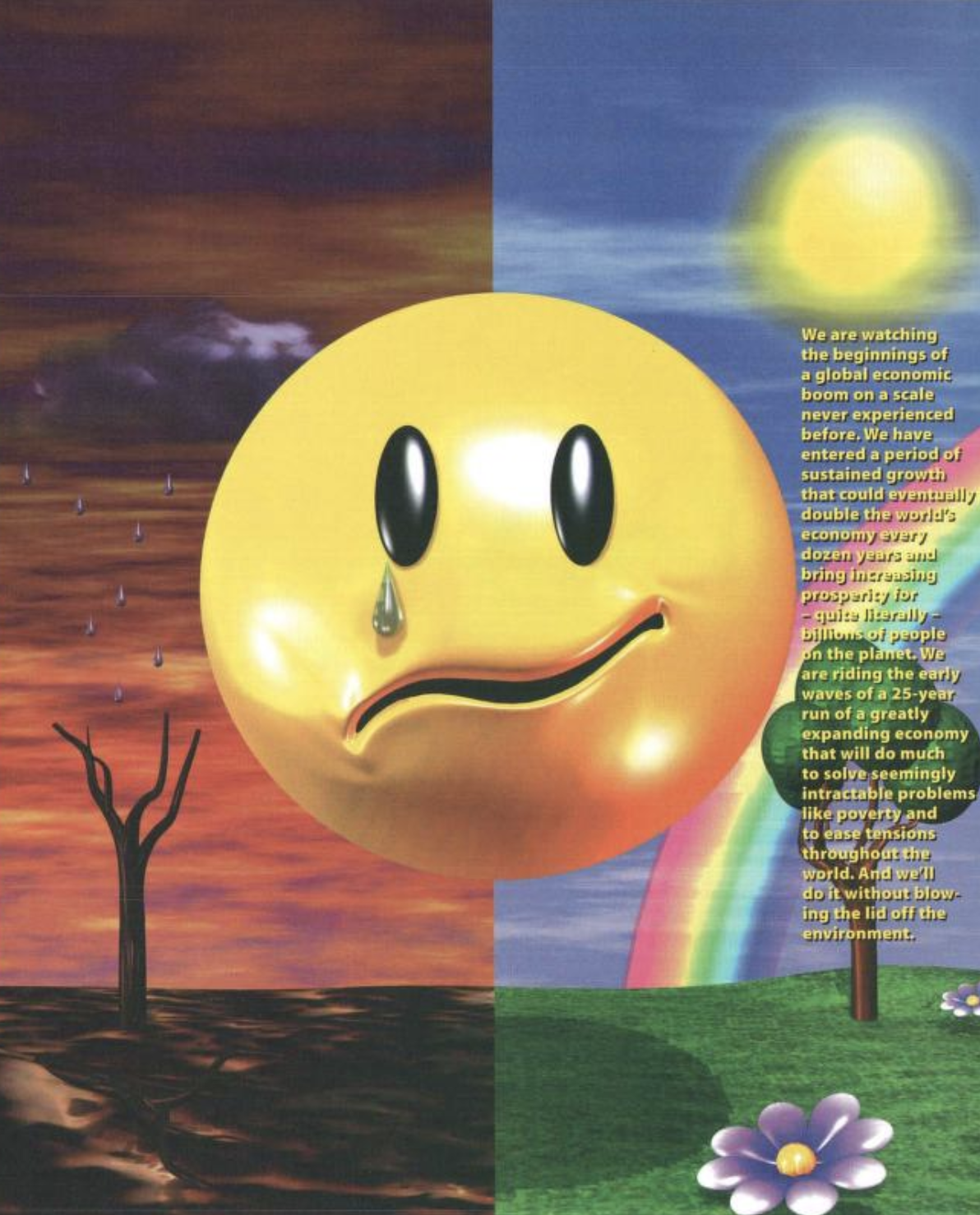

IMG: Source Unknown

IMG: Image to Audio Corruption 02 by Cristóbal Ascencio & Archival Images of AI + AIxDESIGN, 2024

IMG: Dreamscapes of Modernity: Sociotechnical Imaginaries and the Fabrication of Power, edited by Sheila Jasanoff and Sang-Hyun Kim → Dreamscapes of Modernity | Are.na

But why do the stories we tell about AI matter?

‘The narrative wars around AI aren’t just about words and concepts. The ways in which AI is framed and which narratives will stick in the popular imagination are deeply entangled with the ways in which the technical elements of AI will interweave with broader societal dynamics.’ - Future Art Ecosystems Annual Briefing on Public AI, 2024, Serpentine Arts Technologies and the Creative AI Lab

Put simply, media coverage, along with propaganda and public discourse, shapes public perception. This influences not only how people, experts and non-experts alike, understand AI but also how we imagine and build these technologies.

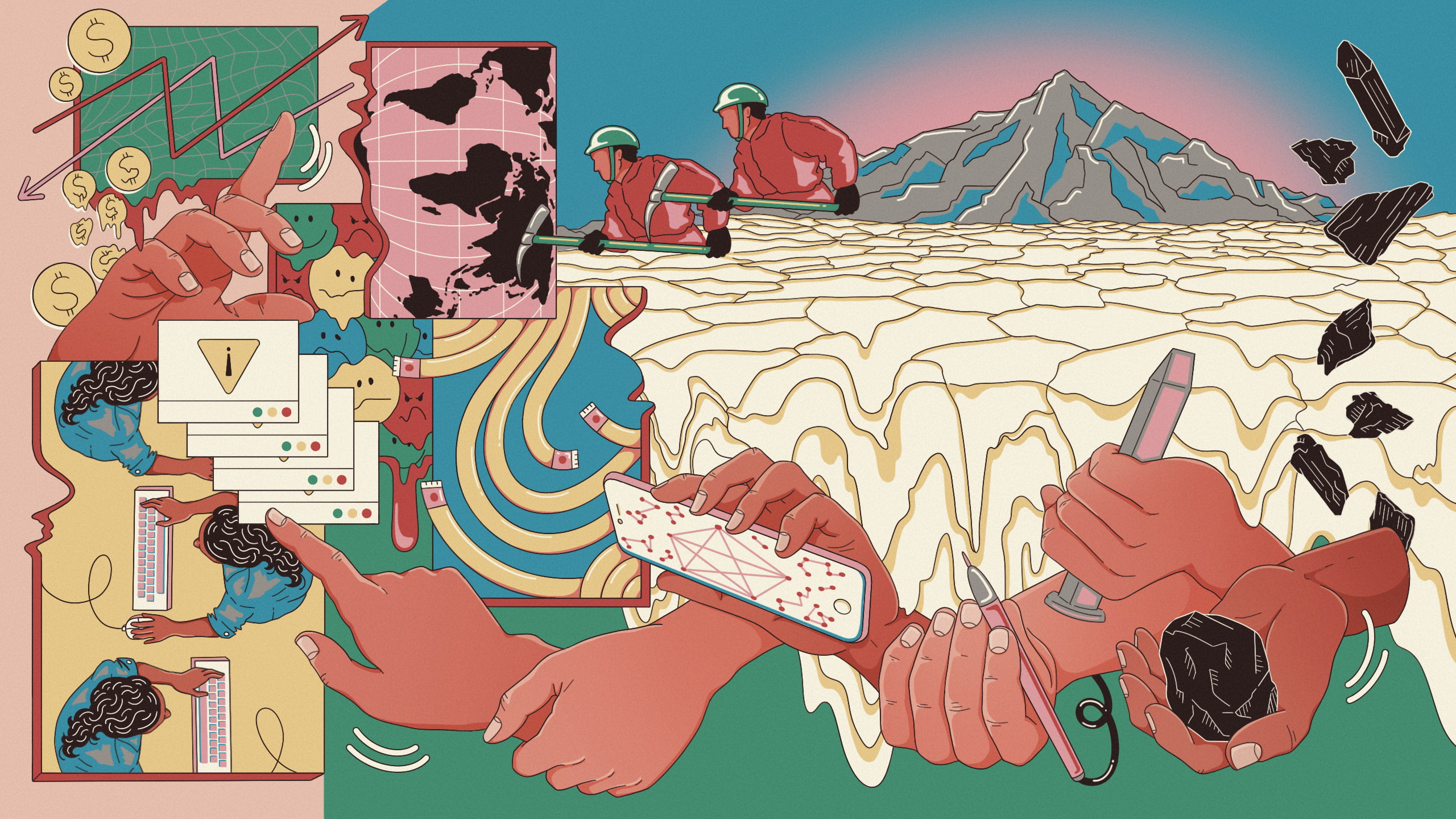

IMG: Labour/Resources, Clarote, 2023

💡 “Sociotechnical imaginaries” is a term coined by Sheila Jasanoff and developed in close collaboration with Sang-Hyun Kim. It describes how visions of scientific and technological progress carry with them ‘societal understandings of the possibilities, potentials and risks embedded in the technology’ and how this ‘plays a large role in influencing how AI is perceived and engaged in societies.’