White also pointed to several recent AWS announcements as examples of continued performance, including the general availability of Amazon Elastic Compute Cloud Capacity Blocks for machine learning, which run on Nvidia's (NVDA) H100 GPUs.

Other highlights include its recent investment in generative AI startup Anthropic, Meta Platforms (META) bringing its Llama 2 large language model to Amazon Bedrock and the general availability of its Amazon Titan Embeddings LLM, among others.

The market is fixated on Nvidia’s superior AI chip, but one must acknowledge the broader data center opportunity as a whole to truly appreciate the bull case for Nvidia stock.

Investors are missing Nvidia’s product-bundling sales strategy, which enables the tech giant to capitalize on the market opportunity ahead and impedes competitors’ ability to take market share.

Similar to how Apple iPhone users upgrade to advanced iPhone models thanks to the stickiness of the services, Nvidia is locking enterprises into the Nvidia software ecosystem for the long term.

Nvidia's stock has surged by around 240% this year thanks to its leadership position in the generative AI revolution. The AI market opportunity is undoubtedly massive, and the AI-related growth story has only begun. Many investors seem to be fixated on Nvidia's superior AI chip, but it is also important to acknowledge the broader data center opportunity as a whole to truly appreciate the bull case for Nvidia stock. The tech giant has been deploying a great sales strategy, namely through selling HGX systems, that enables Nvidia to optimally capitalize on the market opportunity ahead and lock customers into the Nvidia ecosystem for the long term. Nexus maintains a 'buy' rating on Nvidia stock.

In our previous article on Nvidia, Nexus explained in a simplified manner the relation between Nvidia's hardware solutions and software services, and how Nvidia has successfully designed its chips in a manner that paves the way for massive software revenue growth prospects. Nvidia's market share in the AI chip market is estimated to be around 80%, benefitting from a large installed base upon which it can build a flourishing software business. Now in this article, we will delve into another important driver of Nvidia's financial performance, the sale of HGX systems, and how it fosters Nvidia's ability to capitalize on the market opportunity ahead.

In September 2023, Nvidia projected its total long-term annual market opportunity to reach $1 trillion but did not specify a target year for this estimate, although the company did offer a breakdown of the expected market size for specific segments.

Despite shares being near their all-time high, I think investors can still buy in currently. When adjusting for the beat and Q4 guidance, the stock is trading for about 27.5 times its next fiscal year's non-GAAP EPS. That number is actually lower than that of fellow large-cap tech peer Microsoft (MSFT), which is growing much more slowly than Nvidia. I think it's fair to pay this amount for Nvidia, which could grow non-GAAP EPS by another 50% next year after growth this year of close to 250%. Margins don't even have to improve further, because revenue growth driving more gross margin dollars, combined with more cash now earning around 5% and/or buybacks lowering the share count, can really help the EPS number.
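To make the valuation reasoning concrete, here is a rough sketch of the arithmetic. The PEG calculation uses only the 27.5x multiple and the 50% growth estimate quoted above. The EPS illustration that follows uses purely hypothetical inputs (revenue, net margin, interest income and share count are made-up round numbers, not Nvidia figures) and ignores taxes on interest; it only shows that EPS can outgrow revenue at a flat margin when interest income rises and the share count falls.

```python
def peg_ratio(forward_pe: float, eps_growth_pct: float) -> float:
    """Forward P/E divided by expected EPS growth (in percent)."""
    return forward_pe / eps_growth_pct


def eps(revenue: float, net_margin: float, interest_income: float, shares: float) -> float:
    """Simplified EPS: operating profit at a fixed net margin plus interest income, per share."""
    return (revenue * net_margin + interest_income) / shares


# Figures from the article: ~27.5x next-year non-GAAP EPS, ~50% expected EPS growth.
peg = peg_ratio(27.5, 50.0)

# Hypothetical illustration only (NOT Nvidia data): margin held flat at 50%.
base_eps = eps(revenue=100.0, net_margin=0.50, interest_income=0.0, shares=10.0)
# Revenue up 50%, some interest earned on cash, share count down 2% via buybacks.
next_eps = eps(revenue=150.0, net_margin=0.50, interest_income=1.0, shares=9.8)

print(f"PEG ratio: {peg:.2f}")
print(f"EPS growth with flat margin: {(next_eps / base_eps - 1) * 100:.1f}%")
```

In this toy setup EPS grows slightly faster than the 50% revenue growth even though the margin is unchanged, which is the mechanism the paragraph above describes.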

In terms of the three big chipmakers, Nvidia actually trades at a cheaper valuation than Advanced Micro Devices (AMD).

Turning to the data center segment, which is Nvidia's largest and made up 80% of total revenue last quarter, the company's executives have emphasized how high demand for HGX systems has been a key driver of revenue growth. On the Q3 FY2024 Nvidia earnings call, CFO Colette Kress shared:

"The continued ramp of the NVIDIA HGX platform based on our Hopper Tensor Core GPU architecture, along with InfiniBand end-to-end networking drove record revenue of $14.5 billion, up 41% sequentially and up 279% year-on-year."

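As a quick sanity check on the quoted growth rates, the implied earlier revenue figures can be backed out from the $14.5 billion number. This is a minimal sketch that uses only the figures in the quote; the function name is our own, and the results are approximate because the quoted percentages and the revenue figure are rounded.

```python
def implied_base(current: float, growth_pct: float) -> float:
    """Back out the earlier-period value implied by a percentage growth rate."""
    return current / (1 + growth_pct / 100)


current_revenue = 14.5  # $ billions, as quoted on the Q3 FY2024 call

# Up 41% sequentially -> implied prior-quarter revenue
prior_quarter = implied_base(current_revenue, 41)
# Up 279% year-on-year -> implied year-ago revenue
year_ago = implied_base(current_revenue, 279)

print(f"Implied prior quarter: ~${prior_quarter:.1f}B")
print(f"Implied year-ago quarter: ~${year_ago:.1f}B")
```

On these inputs, the quoted segment revenue rose from roughly $3.8 billion a year earlier to roughly $10.3 billion in the prior quarter and then to $14.5 billion.
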
So what is the HGX platform?

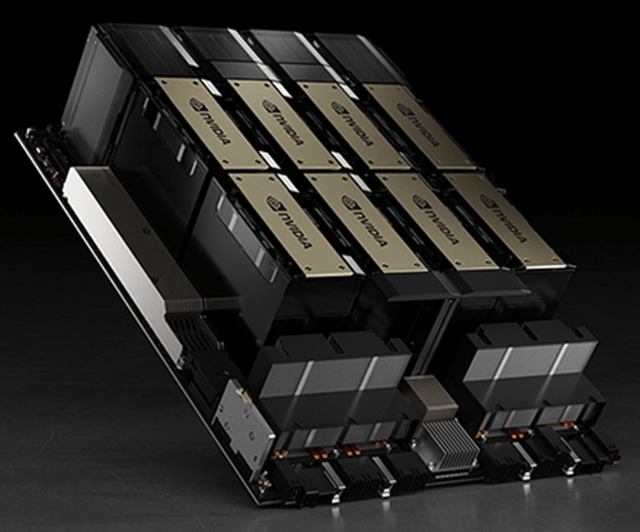

The HGX platform, also known as Nvidia's AI supercomputer, is built for developing, training, and inferencing generative AI models. It combines four or eight AI GPUs (such as A100s or H100s) using Nvidia's networking solutions (InfiniBand) and NVLink technology, and also includes the NVIDIA AI Enterprise software platform.

Nvidia HGX supercomputer (Nvidia)

So essentially, instead of data center customers having to purchase various hardware components separately, Nvidia combines all the components needed for AI workloads into a single system. This is a great product sales strategy whereby Nvidia can cross-sell its other data center solutions, such as networking solutions, alongside the sales of its highly sought-after chips, as one big bundled solution.

In fact, on the Q2 FY2024 Nvidia earnings call, Kress had shared that: